I’m writing this partly for myself, so I have a reference for what went wrong while installing Django in a Python virtual environment on OS X. I’m mostly just going to write about the errors I encountered.

Create the virtualenv

In the directory where I wanted to put the virtual environment, type:

virtualenv django-ve

where django-ve is the name of the virtual environment. virtualenv creates this directory, so it does not need to exist first.

Activate it:

cd django-ve

source bin/activate
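A quick way to confirm that the activation took effect is to ask Python itself. This is only a minimal sketch: the helper name `in_virtualenv` is made up for illustration, and it assumes either an older virtualenv (which sets `sys.real_prefix`) or a newer virtualenv or venv (where `sys.prefix` differs from `sys.base_prefix`).

```python
import sys


def in_virtualenv():
    """Return True if this interpreter appears to run inside a virtual environment."""
    # Older virtualenv releases set sys.real_prefix.
    if hasattr(sys, "real_prefix"):
        return True
    # Newer virtualenv releases and the standard venv module leave
    # sys.base_prefix pointing at the original Python installation.
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


print("virtualenv active:", in_virtualenv())
print("interpreter prefix:", sys.prefix)
```

If the environment is active, the printed prefix should point inside the django-ve directory.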

Install Django

Following Django’s own guide, I still used pip to install it:

pip install Django

But when I did this I got an error with the SSL connection to PyPI, exactly as in this Stack Overflow post. The solution was as described in the accepted answer, though I also had to update pip both inside the new virtual environment and outside it. (I first tried updating it, then recreating the virtual environment, but this didn’t help, which kind of makes sense if virtualenv fetches a new pip each time.)

Install the database drivers

Since I want to use a full MySQL database, I needed to install the Python connector. I stuck with the Django-recommended way and tried to follow their instructions.

When I tried to install mysqlclient I got this same error. Updating wheel as per one of the suggestions didn’t help, but running

xcode-select --install

did fix the problem by installing the Apple-provided developer command line tools, which include a C compiler.

Verify?

As instructed, I verified that Python inside the virtualenv could see Django, and it could.
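The same check can be scripted so it covers the database driver too. The sketch below is an assumption about how one might do it, not the guide’s own method: `module_available` is a hypothetical helper, and it relies on the fact that mysqlclient installs a module named `MySQLdb`.

```python
import importlib.util


def module_available(name):
    """Return True if the top-level module `name` can be found by this interpreter."""
    return importlib.util.find_spec(name) is not None


# Report on Django and on the module that mysqlclient provides.
for name in ("django", "MySQLdb"):
    print(name, "found" if module_available(name) else "NOT found")

# Only import Django if it is actually installed in this environment.
if module_available("django"):
    import django
    print("Django version:", django.get_version())
```

Run it with the virtualenv activated; a "NOT found" line means the package went into a different Python installation.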

At this point the setup guide ended, so I’m just proceeding along with the tutorial.

Tutorial

I got through the tutorial fine up until the part about configuring time zones. Silly me, I wrongly assumed I could change the setting from ‘UTC’ to ‘AEST’, but as the page linked from their own guide shows, it’s based on the TZ database, so I should use ‘Australia/Queensland’.

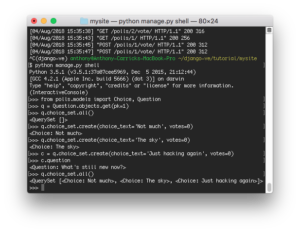
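One way to catch this mistake before touching the settings is to test a candidate name against the TZ database from Python. This is a rough sketch that assumes Python 3.9 or later (for the standard `zoneinfo` module) and a system with time zone data installed; `is_valid_timezone` is a made-up helper name.

```python
import zoneinfo


def is_valid_timezone(name):
    """Return True if `name` is a key in the TZ database available to Python."""
    try:
        zoneinfo.ZoneInfo(name)
    except (zoneinfo.ZoneInfoNotFoundError, ValueError):
        # Unknown or malformed keys end up here.
        return False
    return True


for candidate in ("AEST", "Australia/Queensland"):
    print(candidate, "->", is_valid_timezone(candidate))

# The corresponding line in the project's settings.py would then be:
TIME_ZONE = "Australia/Queensland"
```

Abbreviations such as AEST are not keys in the TZ database, which is why only the region-style name works.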

Proceeding along, running

python manage.py migrate

runs successfully and going back to MySQL Workbench I can see all the tables that Django created. Looking good so far.
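For the tables to land in MySQL, the project’s settings.py has to point Django at that database. The fragment below is only an illustration of what such a block might look like: the database name, user, host, port and the `DJANGO_DB_PASSWORD` environment variable are all placeholders I have assumed, not values from my setup. Only the `django.db.backends.mysql` engine name and the key names come from Django itself.

```python
import os

# Hypothetical connection details; replace every value with your own.
DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.mysql",  # Django's built-in MySQL backend
        "NAME": "mysite",                      # placeholder database name
        "USER": "django_user",                 # placeholder MySQL user
        # Read the password from the environment rather than hard-coding it.
        "PASSWORD": os.environ.get("DJANGO_DB_PASSWORD", ""),
        "HOST": "127.0.0.1",                   # assumes a local MySQL server
        "PORT": "3306",                        # MySQL's default port
    }
}
```

The database named in NAME has to exist before running migrate; Django creates the tables, not the database.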

By this stage I’ve set it up and connected it to a real database, so that’s enough for now. I may come back later and critically evaluate Django for my purposes.